Execute this code, replacing the service name with the name of your Azure Synapse Analytics workspace.

Parquet Data File To Download Sample Twitter

When it comes to storing intermediate data between steps of an application, Parquet can provide more advanced capabilities.

Each area will fit into the memory of a standard workstation with 32 GB of RAM. Configuring the size of Parquet files by setting the store.parquet.block-size option can improve write performance. If you need example dummy files for testing, demo, or presentation purposes, this is a great place for you.

The download consists of a zip archive containing 9 Parquet files. All files are free to download and use.

This file is less than 10 MB. Source: kylo/userdata1.parquet at master · Teradata/kylo. In this case you are only going to read information, so the db_datareader role is enough.

I have made the following changes. Give Azure Synapse Analytics access to your Data Lake. We care for our content.

The trip data was not created by the TLC, and the TLC makes no representations as to the accuracy of these data. The easiest way to see the content of your Parquet file is to provide the file URL to the OPENROWSET function and specify the PARQUET format.

Sample Parquet data file: cities.parquet. Removed the registration_dttm field because its type, INT96, is incompatible with Avro. That is, you may have a directory of partitioned Parquet files in one location and, in another directory, files that haven't been partitioned.

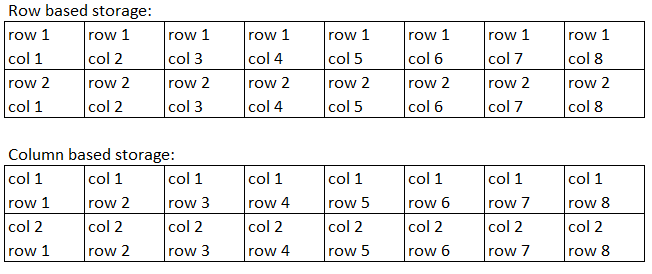

If the file is publicly available, or if your Azure AD identity can access this file, you should be able to see the content of the file using a query like the one shown in the following example. Apache Parquet is an open source, column-oriented data file format designed for efficient data storage and retrieval. Working with Parquet.

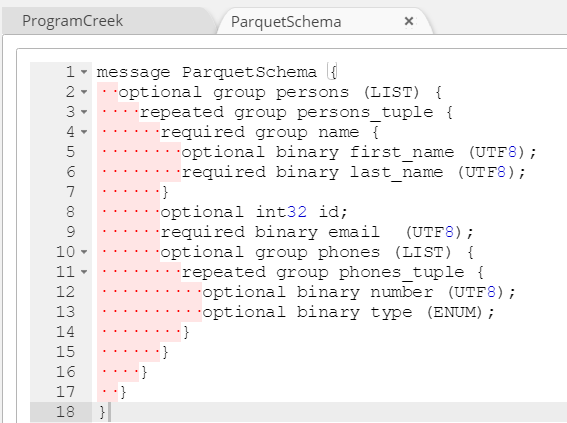

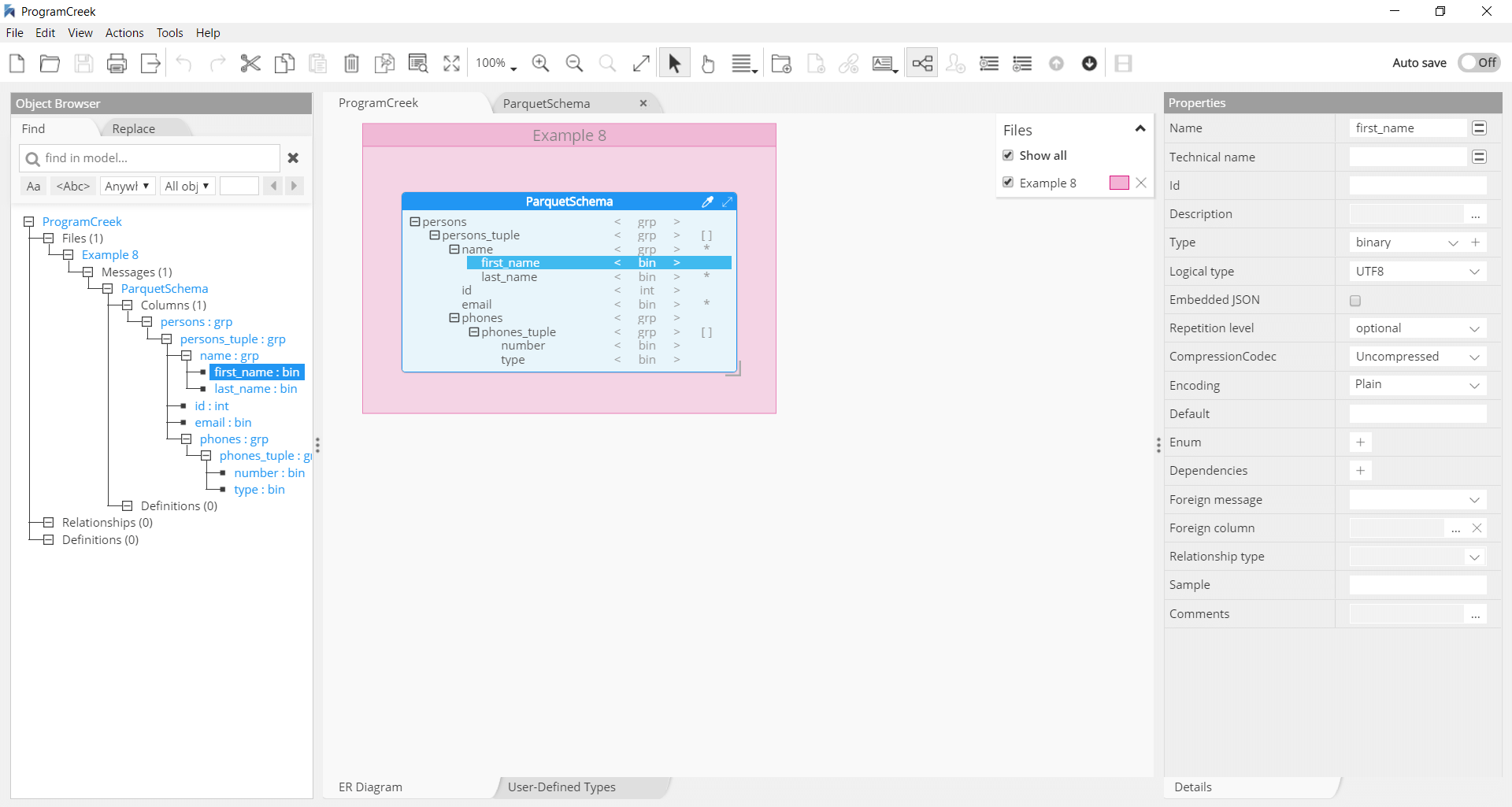

Support for complex types, as opposed to string-based types (CSV) or a limited. Then copy the file to your temporary folder/directory. The files might be useful for testing uploads, HTML5 videos, etc.

Next you are ready to create linked. File containing data in PARQUET format. View the original dataset location and the.

`output_date = odb.get_data(dataset_path, 'date-parquet', access_key=access_key)` Get the Prescribing Chemical data file. The data was collected and provided to the NYC Taxi and Limousine Commission (TLC) by technology providers authorized under the Taxicab and Livery Passenger Enhancement Programs (TPEP/LPEP). Using the Parquet file format with Python.

Download the entire SynthCity dataset, split into 9 geographical areas. This Notebook has been released under the Apache 2.0 open source license. Get the Date data file.

Kylo is a data lake management software platform and framework for enabling scalable, enterprise-class data lakes on big data technologies such as Teradata, Apache Spark, and/or Hadoop. All files are safe from viruses and adults-only content.

In the future, when there is support for cloud storage and other file formats, this would mean you could point to an S3 bucket of Parquet data and a directory of CSVs on the local file system and query them together as a single dataset. First, give Azure Synapse Analytics access to your database. Parquet provides efficient data compression and encoding schemes with enhanced performance to handle complex data in bulk.

The format is explicitly designed to separate the metadata from the data. Kylo is licensed under Apache 2.0. Basic file formats, such as CSV, JSON, or other text formats, can be useful when exchanging data between applications.

The larger the block size, the more memory Drill needs for buffering data. Contributed by Teradata Inc. The block size is the block size of MFS, HDFS, or the file system.

Readers are expected to first read the file metadata to find all the column chunks they are interested in. Open an Explorer window and enter %TEMP% in the address bar. Substituted null for ip_address in some records to set up data for filtering.
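As a rough illustration of the metadata-first read order, the sketch below locates the file metadata block in a Parquet file image. It relies on the published file layout: the 4-byte magic PAR1 at both ends of the file, with a 4-byte little-endian metadata length just before the trailing magic. The helper names locate_footer and build_fake_file are hypothetical, and the "metadata" used here is placeholder bytes rather than real Thrift-encoded file metadata.

```python
import struct
from typing import Tuple

PARQUET_MAGIC = b"PAR1"


def locate_footer(data: bytes) -> Tuple[int, int]:
    """Return (offset, length) of the file metadata block in a Parquet file image."""
    # Smallest possible image: leading magic + length field + trailing magic.
    if len(data) < 12:
        raise ValueError("too short to be a Parquet file")
    if data[:4] != PARQUET_MAGIC or data[-4:] != PARQUET_MAGIC:
        raise ValueError("missing PAR1 magic bytes")
    # The 4 bytes before the trailing magic hold the metadata length (little-endian).
    (meta_len,) = struct.unpack("<I", data[-8:-4])
    meta_start = len(data) - 8 - meta_len
    if meta_start < 4:
        raise ValueError("metadata length exceeds file size")
    return meta_start, meta_len


def build_fake_file(payload: bytes, metadata: bytes) -> bytes:
    """Assemble a byte string with the Parquet framing (illustration only)."""
    return (
        PARQUET_MAGIC
        + payload
        + metadata
        + struct.pack("<I", len(metadata))
        + PARQUET_MAGIC
    )


if __name__ == "__main__":
    blob = build_fake_file(b"x" * 10, b"META!")
    offset, length = locate_footer(blob)
    print(offset, length, blob[offset:offset + length])
```

A real reader would decode the bytes at that offset as Thrift file metadata and use the column chunk offsets it contains to seek into the data; in practice a library such as PyArrow does this for you.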

Parquet is available in multiple languages, including Java, C++, Python, etc. This repository hosts sample Parquet files from here. To get and locally cache the data files, the following simple code can be run.
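A minimal sketch of a get-and-cache step, using only the Python standard library, might look like this. The function name fetch_cached and the cache directory layout are assumptions made for illustration; this is not the code from any of the packages mentioned on this page.

```python
import shutil
import urllib.request
from pathlib import Path
from urllib.parse import urlparse


def fetch_cached(url: str, cache_dir: str) -> Path:
    """Download url into cache_dir once; later calls reuse the local copy."""
    cache = Path(cache_dir)
    cache.mkdir(parents=True, exist_ok=True)

    # Use the last path component of the URL as the local file name.
    name = Path(urlparse(url).path).name
    if not name:
        raise ValueError("URL has no file name component")

    target = cache / name
    if not target.exists():
        # Write to a temporary name first so an interrupted download
        # is never mistaken for a complete cached file.
        tmp = target.with_name(target.name + ".part")
        with urllib.request.urlopen(url) as response, open(tmp, "wb") as out:
            shutil.copyfileobj(response, out)
        tmp.replace(target)
    return target
```

Calling fetch_cached(url, "data_cache") with the same URL a second time returns the cached path without touching the network. The cache is keyed on the file name only, so two different URLs ending in the same name would collide; a fuller version might hash the whole URL instead.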

If clicking the link does not download the file, right-click the link and save the link/file to your local file system. This is what will be used in the examples. Parquet files that contain a single block maximize the amount of data Drill stores contiguously on disk.

Spark - Parquet files. Contribute to MrPowers/python-parquet-examples development by creating an account on GitHub. This allows splitting columns into multiple files, as well as having a single metadata file reference multiple.

The column chunks should then be read sequentially.

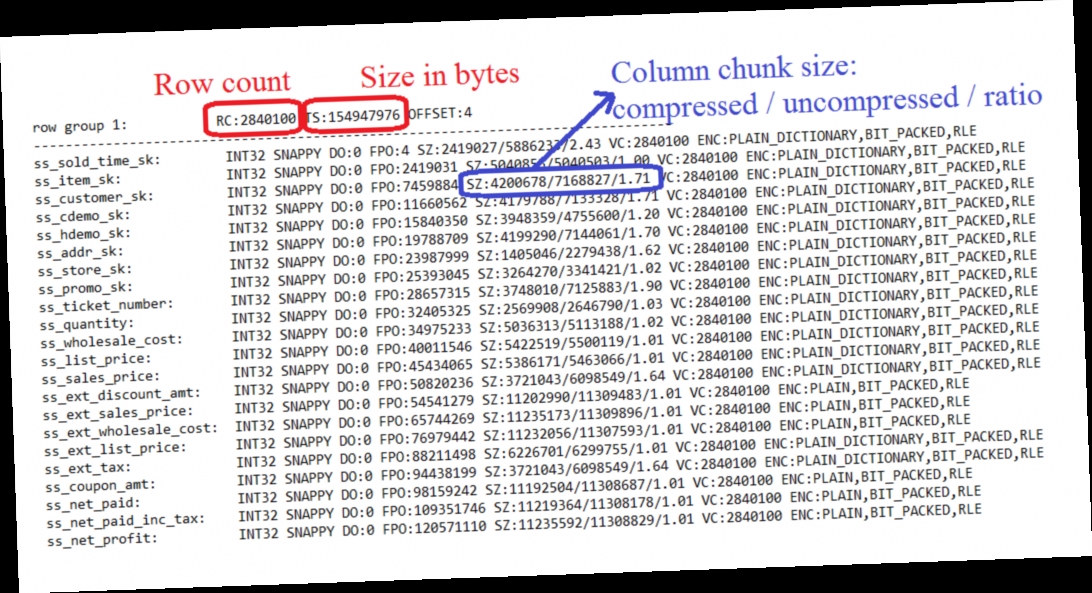

Diving Into Spark And Parquet Workloads By Example Databases At Cern Blog

Convert Csv To Parquet File Using Python Stack Overflow

The Parquet Format And Performance Optimization Opportunities Boudewijn Braams Databricks Youtube

Chris Webb's BI Blog: Parquet File Performance In Power BI/Power Query
